Smol models can add (better). First experiment in LLM finetuning

Since the holidays, I’ve been playing around with LLMs (as has everyone and their dog). Given the recent R1 model and the paper showing off the GRPO results, I decided it would be interesting to give GRPO a try.

GRPO is an interesting take on reinforcement learning. All of the difficulties in creating value functions are sidestepped completely in favor of duplicating the world: “in situation X, randomly generate N alternatives; look at the outcomes and teach the network based on their relative performance.”
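To make the “relative performance” idea concrete, here is a minimal sketch of how a group of rewards can be turned into a training signal. It assumes the common GRPO formulation, where each sample's reward is standardized against the other samples for the same prompt. The function name `group_relative_advantages`, the `eps` constant, and the use of the population standard deviation are illustrative choices of this sketch, not taken from trl.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """Standardizes rewards within one group of completions for a single prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; an assumption of this sketch
    # Completions better than the group average get a positive advantage,
    # worse ones a negative advantage; eps avoids division by zero.
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled completions for the same prompt, scored by a reward function.
advantages = group_relative_advantages([100.0, 50.0, 0.0, 50.0])
print(advantages)
```

In this formulation, if every completion in a group gets the same reward, all advantages are zero and that prompt contributes no learning signal.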

It turned out to be way easier than I thought. Huggingface trl is right on the cutting edge: an implementation was added a few days ago. Note that at the moment (2025-01), the PyPI release still doesn’t have GRPO, so you need to install straight from GitHub.

Nothing to do but to just dive in. Continuing in the huggingface ecosystem, I picked their SmolLM as a base; the 360M model is small enough to train on my 3080 Ti, large enough to be doing something interesting.

The code is straightforward; the code blocks below are pasted from the Jupyter notebook I used. First, the imports and setup:

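%pylab inline
import bitsandbytes
import numpy as np
import pandas as pd
import peft
import re
import torch
import transformers
import trl
from datasets import Dataset

model_name = "HuggingFaceTB/SmolLM-360M"
device = "cuda"

# Load the tokenizer and the base model (the model is needed for the baseline evaluation below)
tokenizer = transformers.AutoTokenizer.from_pretrained(model_name)
model = transformers.AutoModelForCausalLM.from_pretrained(model_name).to(device)

# It's not a chat model; these workarounds are needed to avoid errors
tokenizer.pad_token_id = tokenizer.eos_token_id
tokenizer.pad_token = tokenizer.eos_token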

We generate a bunch of data in the format "So <number> + <number> =", and define a reward function that checks the continuation for correctness. To make things easy on the algorithm, the reward function is smooth, granting incremental partial credit for getting closer to the actual number.

def create_addition_examples(seed: int, n: int):
    """Creates a list of simple addition problem prompts."""
    rng = np.random.default_rng(seed)
    return [dict(
        prompt=f"So {a} + {b} ="
    ) for a, b in rng.integers(1000, size=(n, 2))]

def calculate_rewards(prompts: list, completions: list) -> list:
    """Returns reward scores for a batch of addition problem solutions."""
    return [calculate_single_reward(p, c) for p, c in zip(prompts, completions)]

def calculate_single_reward(prompt: str, completion: str) -> float:
    """Scores an addition problem solution based on its accuracy (0-100)."""
    full_response = prompt + completion
    match = re.match(r"So (\d+) \+ (\d+) = (\d+).*", full_response)
    if not match:
        return 0.0
    num1, num2, answer = [int(v) for v in match.groups()]
    return 100.0 / (1.0 + abs(answer - (num1 + num2)))

dataset = Dataset.from_list(create_addition_examples(42, n=10_000)).train_test_split(test_size=200)
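As a quick sanity check of the reward shape, the snippet below evaluates the same formula, 100 / (1 + |error|), for a few hand-picked errors. The helper name `reward_for_error` is hypothetical and exists only for this illustration.

```python
def reward_for_error(error: int) -> float:
    """Reward as a function of the distance from the correct sum."""
    return 100.0 / (1.0 + abs(error))


# Exact answer, off by one, off by four, off by ninety-nine.
for error in (0, 1, 4, 99):
    print(error, reward_for_error(error))
# 0 100.0
# 1 50.0
# 4 20.0
# 99 1.0
```

The reward halves as soon as the answer is off by one, so most of the signal is concentrated near the correct answer, while far-off guesses still score slightly above the flat 0.0 given to non-numeric output.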

Next, add some code to evaluate how we’re doing. Normally we’d use some ready-made HF code for this, but at the moment the GRPO implementation doesn’t work well with evaluations (it’s not even hot off the presses yet; it’s in the press at this very moment).

def evaluate_model(model, dataset, tokenizer, device):
    """Evaluates model predictions on addition problems."""
    results = []
    for example in dataset["test"]:
        inputs = tokenizer(
            example["prompt"],
            return_tensors="pt"
        ).to(device)
        outputs = model.generate(
            **inputs,
            max_new_tokens=6,
            pad_token_id=tokenizer.eos_token_id
        )
        input_length = inputs["input_ids"].shape[-1]
        completion_tokens = outputs[0, input_length:].cpu().numpy().tolist()
        completion = tokenizer.decode(completion_tokens)
        results.append(dict(
            prompt=example["prompt"],
            completion=repr(completion),
            reward=calculate_single_reward(example["prompt"], completion)
        ))
    return pd.DataFrame.from_records(results)

def measure(evaluation_results):
    display(evaluation_results.iloc[0:20])
    hist(evaluation_results.reward, np.linspace(0, 100, 50))

# Run evaluation
evaluation_results = evaluate_model(model, dataset, tokenizer, device)
measure(evaluation_results)

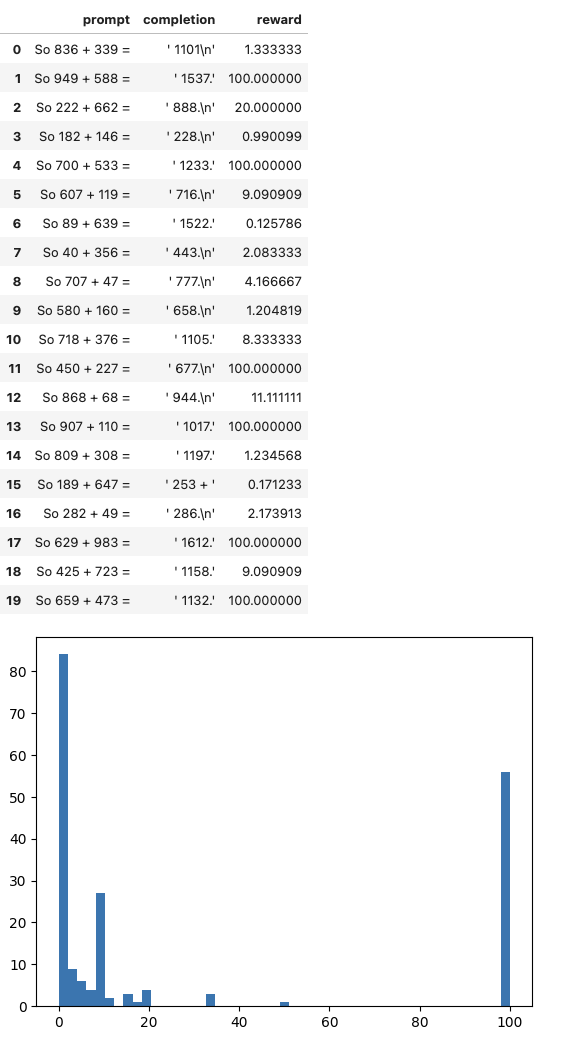

So, this is how the untrained model does on the reward. 100 means a correct answer, and the reward goes down from there towards zero; exactly zero means no number at all.
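The histogram can be complemented with a couple of summary numbers. The following is a minimal sketch, assuming only a list of rewards on the 0-100 scale described above (for instance `evaluation_results.reward.tolist()`); the function `summarize_rewards` is a hypothetical helper, not part of the notebook.

```python
import statistics


def summarize_rewards(rewards: list[float]) -> dict:
    """Summarizes rewards: mean, exact-match rate, and share of unparseable answers."""
    n = len(rewards)
    return {
        "mean_reward": statistics.fmean(rewards),
        # A reward of exactly 100 means the sum was correct.
        "exact_rate": sum(r == 100.0 for r in rewards) / n,
        # A reward of exactly 0 means no number was found right after the "=".
        "no_number_rate": sum(r == 0.0 for r in rewards) / n,
    }


# Made-up rewards purely for illustration, not measured results.
print(summarize_rewards([100.0, 50.0, 0.0, 100.0]))
```

Computing the same summary before and after training gives a single-number comparison to go with the two histograms.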

model = transformers.AutoModelForCausalLM.from_pretrained(model_name).to(device)  # Reload to not accidentally continue from already trained

grpo_config = trl.trainer.grpo_trainer.GRPOConfig(
    # beta=0.1,
    per_device_train_batch_size=8,
    output_dir="/tmp/adder",
    do_train=True,
    do_eval=False,
    learning_rate=1e-3,
    logging_steps=25,
    gradient_accumulation_steps=4,
    max_completion_length=6,
    eval_on_start=False,
    label_names=[],
    save_steps=50,
    weight_decay=0.001,
    num_train_epochs=2,
)

trainer = trl.trainer.grpo_trainer.GRPOTrainer(
    model=model,
    reward_funcs=[calculate_rewards],
    args=grpo_config,
    train_dataset=dataset["train"],
    eval_dataset=dataset["test"],
    peft_config=peft.LoraConfig(task_type="CAUSAL_LM"),
    processing_class=tokenizer,
)

trainer.train()

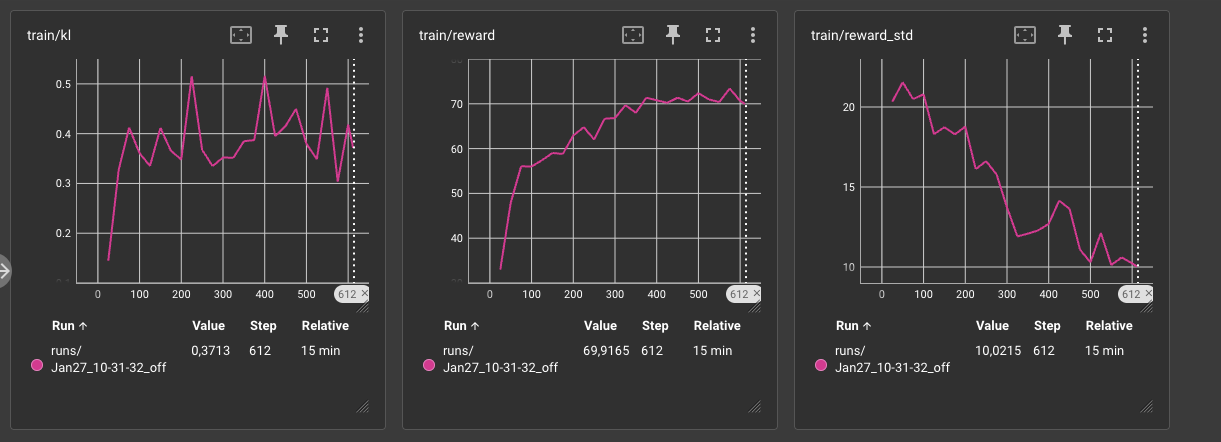

We run training (about 15 minutes!) and…

…it learns to add. Or actually remembers how to add. Or figures out that what it’s supposed to do in this situation is to add correctly.

There’s no way this small amount of training data would actually teach it to add better; but during pretraining it has seen countless examples of sums, some done correctly, some incorrectly. Depending on the context, each kind of continuation after the “=” token (a correct result, an incorrect result, or a non-number) has some probability. What we’re doing, as far as I understand the situation, is adjusting those probabilities.

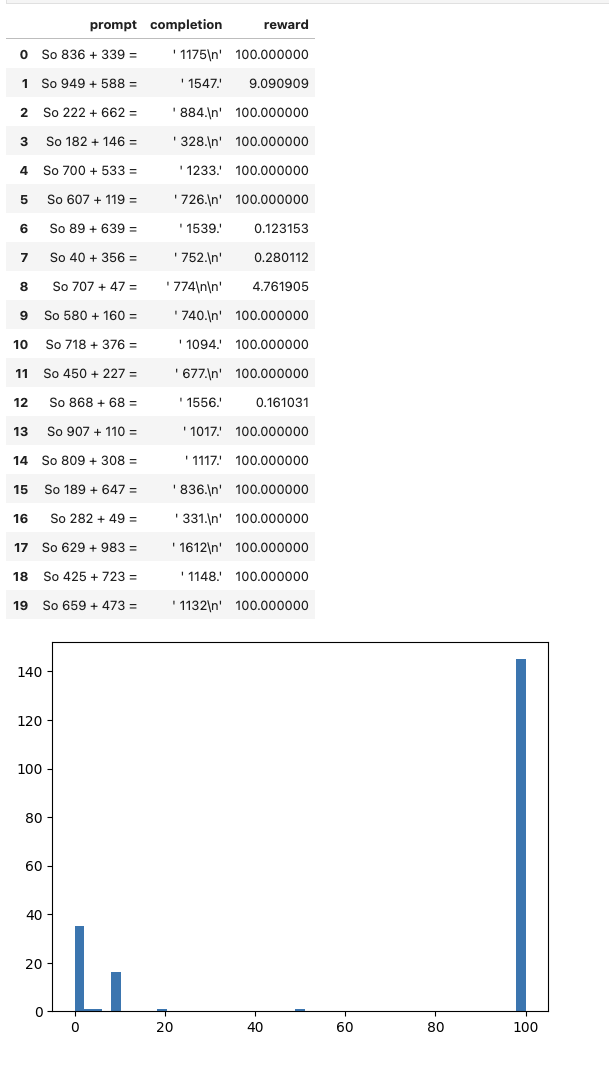

In the learned state, the evaluation output looks pretty different.

trained_evaluation_results = evaluate_model(model, dataset, tokenizer, device)

measure(trained_evaluation_results)

Note that the KL divergence term in the loss function ensures the model doesn’t go all the way towards the numbers, but drives it to a reasonable compromise. Still, the erroneous results seem to mostly vanish.
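To illustrate how such a penalty behaves, here is a minimal sketch of a per-token KL estimate of the form exp(d) - d - 1, where d is the log-probability difference between the frozen reference model and the model being trained. As far as I understand, this is the kind of estimator used with GRPO, but treat the exact form, the function name `kl_penalty`, and the `beta` value as assumptions of this sketch rather than a description of trl internals.

```python
import math


def kl_penalty(logp: float, ref_logp: float, beta: float = 0.1) -> float:
    """Per-token penalty that grows as the trained model drifts from the reference."""
    diff = ref_logp - logp
    # exp(x) - x - 1 is zero at x == 0 and positive everywhere else.
    return beta * (math.exp(diff) - diff - 1.0)


# Same probability under both models: no penalty.
print(kl_penalty(math.log(0.5), math.log(0.5)))
# The trained model has become much more confident than the reference: penalized.
print(kl_penalty(math.log(0.99), math.log(0.5)))
```

The penalty is added to the loss, so pushing a token's probability far from what the original model assigned is only worthwhile when the reward gain outweighs it.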

There are a number of logical next steps from here:

- Evaluate the performance of the untrained and trained models on some standard benchmarks to ensure that we don’t accidentally make it do a lot worse on other problems
- Test if we can integrate this with the instruction-following version
- Test the addition on prompts in formats other than the “So ” one used above
  - Indeed, make the training set broader
- Test if the new (remembered) addition capability generalizes to subtraction automatically (I can see that going either way, depending on how the model represents things internally)
- …
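As a starting point for the subtraction experiment, the prompt generator and the reward could be generalized along these lines. This is only a sketch for that next step: `create_arithmetic_examples`, `score_arithmetic`, the use of the standard-library `random` module, and the choice to allow negative answers are all assumptions, not something from the notebook above.

```python
import random
import re

OPERATIONS = {"+": lambda a, b: a + b, "-": lambda a, b: a - b}


def create_arithmetic_examples(seed: int, n: int, op: str) -> list[dict]:
    """Creates prompts like "So 12 - 5 =" for the given operator."""
    rng = random.Random(seed)
    return [
        dict(prompt=f"So {rng.randrange(1000)} {op} {rng.randrange(1000)} =")
        for _ in range(n)
    ]


def score_arithmetic(prompt: str, completion: str) -> float:
    """Same smooth 0-100 reward as before, but for either operator."""
    match = re.match(r"So (\d+) ([+-]) (\d+) = (-?\d+)", prompt + completion)
    if not match:
        return 0.0
    a, op, b, answer = match.groups()
    expected = OPERATIONS[op](int(a), int(b))
    return 100.0 / (1.0 + abs(int(answer) - expected))


print(create_arithmetic_examples(seed=0, n=2, op="-"))
print(score_arithmetic("So 12 - 5 =", " 7"))   # correct answer
print(score_arithmetic("So 5 - 12 =", " -6"))  # off by one
```

Running the evaluation loop with subtraction prompts on both the base and the trained model would show whether the improvement carries over.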

But on the whole, I think we got what we came for here - a quick first touch with GRPO and LM finetuning.

Please let me know if you decide to try this or one of the next steps!
